Cinelerra — the next step

A draft of how the GUI could be structured

by Ichthyostega, 7.2.2008

Yesterday, in my first response, I pointed out that Richard's drawing is quite similar to the structure I am implementing in the middle layer of the upcoming application as a »high level model«.

Personally, I care most about the normal editing workflow being convenient, while at the same time having seamless access to compositing possibilities. I think that's important: you can go from quick drafting to fine tuning within the same application, and you don't lose your fine-tuning work if you have to adjust the basic structure of the edits.

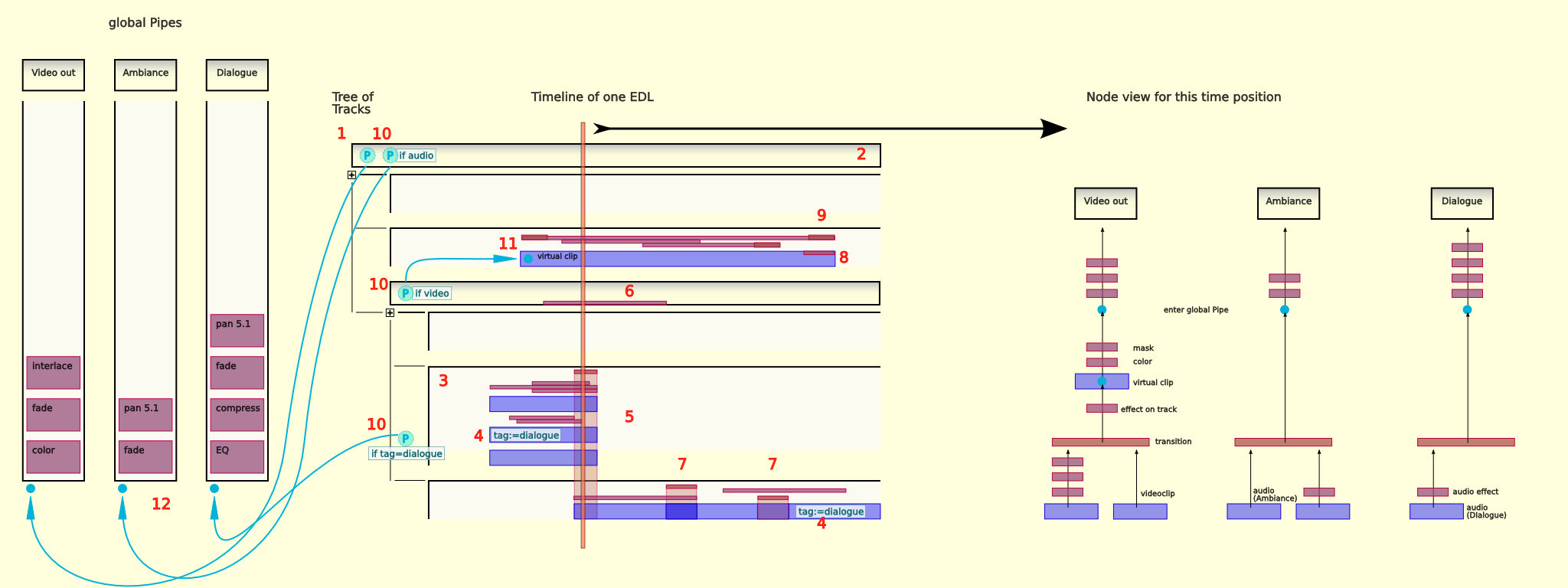

Now, while the Proc-Layer I am currently implementing shall be able to run without a GUI, and while any GUI is always free not to use all the possibilities provided by the Proc-Layer (and doing so could be a good start), I want to show how a GUI could eventually present the high level model maintained by the Proc-Layer. Please note that I chose a pretty much real-world scenario, nothing arbitrarily wild or convoluted: a dialogue sequence with some transitions, and we need to do some retouching as an overlay.

For working with the individual clips and objects, we use the timeline view (actually we have N such views, because we have N EDLs). In addition, we have a section with global Pipelines, similar to hardware audio consoles. As a third view, we can select a node display for one specific time position. Basically, that's the problem with the "node graph" approach: it is not good at capturing temporal change...

Some details to note (see the red numbers in the drawing):

- Tracks are nothing but an organisational grid. They can be used to place objects. Tracks are organized into a tree.

- A Track group has an additional group bar, which can be used to define properties for all enclosed sub-Tracks. It is possible to display already rendered Frames from the cache on this group bar.

- This track has been expanded to show all details of the clip: Clips are typically compound clips and contain several streams, like video and audio. This clip here has an additional audio stream containing already synched (maybe overdubbed) dialogue.

- it is possible to tag source material or individual clips at various levels. These tags can be used to set up default behaviour. In this example, we tagged the dialogue sound takes and automatically route them to a separate Pipeline, so we can process, fade and pan-position the dialogue sound differently than the ambiance.

- Effects are simply attached to the clips. The system should be clever enough not to add an audio effect to the video Pipe. If we want to apply an effect only to one specific stream of a clip, we can place the effect directly in the expanded view.

- we can place effects relative to some track as well (and use all possibilities of the "Placement" concept, e.g. we could additionally anchor the effect at some clip, so it follows the clip but is applied to the track). Effects placed on tracks are inserted after the individual clip-Pipes, but before routing to the global Pipes.

- Transitions can be applied in any order, before or after the effects.

- we could consider building in a convenience shortcut for adding a fade-in or fade-out, similar to what Ardour does. (The fade is just another effect node, but it will be inserted prior to any other effects.)

- in a similar manner, we could add a convenience shortcut for fading any effect in or out. We do this by creating a local crossfade between the data processed and not processed by the effect node in question. In many cases this saves you the effort of keyframing the effect.

- A special form of "Placement" is the placement-to-an-output-Pipe. You can read it as "plug the output into this processing Pipe". In particular, we can attach such a placement at track level. Placements will be discovered by searching the context hierarchically. Placements can have conditions. In our example, the routing information for the dialogue sound will be found using the tag "dialogue", while the normal sound searches up the track hierarchy.

- because tracks are implemented as local processing Pipes, we can create a virtual clip by plugging the video output into the input side of the clip's Pipe. (Normally, the system would feed the output of the source readers and codecs in here.) In our example, we use this to apply a mask. (Well, we could have done this directly on the track, but by using the virtual clip we create a new building block we can handle, move around and use like a real clip.)

- finally, the calculated data enters the global Pipes section, where we can do anything we could do to an individual clip.
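To make the routing rule from the tagging and output-placement points more concrete, here is a minimal C++17 sketch of such a hierarchical search. It is not Proc-Layer code: the types `OutputPlacement`, `Track`, `Clip` and the function `resolveOutput` are hypothetical, and the sketch assumes that a placement's condition is a single required tag and that the nearest matching placement wins (the clip itself first, then each enclosing track up to the root).

```cpp
#include <cassert>
#include <optional>
#include <set>
#include <string>
#include <vector>

// Hypothetical: an output placement reads as "plug the output into this Pipe",
// optionally guarded by a tag condition.
struct OutputPlacement {
    std::string requiredTag;  // empty string = unconditional
    std::string targetPipe;   // name of a global Pipe
};

// Tracks form a tree; a track (or its group bar) may carry output placements.
struct Track {
    std::string name;
    const Track* parent = nullptr;
    std::vector<OutputPlacement> placements;
};

// A clip (or one of its streams) sits on a track and may carry its own tags and placements.
struct Clip {
    const Track* track = nullptr;
    std::set<std::string> tags;
    std::vector<OutputPlacement> placements;
};

// Returns the first placement at this level whose condition the clip's tags satisfy.
std::optional<std::string> matchAt(const std::vector<OutputPlacement>& placements,
                                   const std::set<std::string>& tags) {
    for (const auto& p : placements)
        if (p.requiredTag.empty() || tags.count(p.requiredTag) > 0)
            return p.targetPipe;
    return std::nullopt;
}

// Searches the context hierarchically: the clip first, then each enclosing
// track up to the root. The nearest matching placement wins.
std::optional<std::string> resolveOutput(const Clip& clip) {
    assert(clip.track != nullptr && "a clip must be placed on some track");
    if (auto hit = matchAt(clip.placements, clip.tags)) return hit;
    for (const Track* t = clip.track; t != nullptr; t = t->parent)
        if (auto hit = matchAt(t->placements, clip.tags)) return hit;
    return std::nullopt;  // unrouted: no placement found anywhere
}
```

With a conditional placement "dialogue → Dialogue" on a scene group and an unconditional "→ Master" on the root track, a tagged dialogue take resolves to the Dialogue Pipe, while an untagged ambiance clip on the same track falls through to Master. Checking conditions level by level also lets a placement on the clip itself override anything inherited from the tracks.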
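The effect fade shortcut boils down to a per-frame mix `out = (1 - g) * dry + g * wet`, where `dry` is the input of the effect node, `wet` its output, and `g` a gain ramp. Here is a minimal C++17 sketch, assuming a single channel of float samples, a linear ramp measured in sample frames, and a hypothetical `EffectFn` standing in for the effect node (none of these names come from the Proc-Layer):

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Buffer = std::vector<float>;                      // one channel of samples (assumption)
using EffectFn = std::function<Buffer(const Buffer&)>;  // hypothetical stand-in for an effect node

enum class FadeDirection { In, Out };

// Linear ramp: 0 before `start`, rising to 1 over `length` frames, 1 afterwards.
float rampGain(std::size_t frame, std::size_t start, std::size_t length) {
    if (frame < start) return 0.0f;
    if (length == 0 || frame >= start + length) return 1.0f;
    return static_cast<float>(frame - start) / static_cast<float>(length);
}

// Fades an effect in or out by crossfading between the unprocessed (dry)
// and the processed (wet) signal; the effect's own parameters stay untouched.
Buffer fadeEffect(const Buffer& dry, const EffectFn& effect,
                  std::size_t start, std::size_t length, FadeDirection dir) {
    const Buffer wet = effect(dry);
    assert(wet.size() == dry.size() && "effect must preserve the buffer length");
    Buffer out(dry.size());
    for (std::size_t i = 0; i < dry.size(); ++i) {
        float g = rampGain(i, start, length);
        if (dir == FadeDirection::Out) g = 1.0f - g;  // fade-out mirrors the ramp
        out[i] = (1.0f - g) * dry[i] + g * wet[i];
    }
    return out;
}
```

Because the ramp only scales the mix, the effect parameters themselves never need keyframes; the same function serves for fading in and fading out.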

The node view on the right side shows the (user visible, "high level") configuration for one single time position. We could even think of making this view fully editable, but doing so is anything but trivial.
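As a rough illustration of what this view would derive from the timeline model, the following C++17 sketch collects, for one time position, the chain of nodes each active clip passes through. It assumes the ordering described in the points above (the convenience fade first, then the clip's effects, then effects placed on the track, and finally the global Pipe the track is plugged into), measures time in seconds, and leaves out transitions and tag-based routing; `TrackModel`, `ClipModel`, `EffectRef` and `nodeViewAt` are hypothetical names.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Half-open time interval in seconds (assumption).
struct TimeSpan {
    double start = 0.0;
    double end = 0.0;
    bool contains(double t) const { return start <= t && t < end; }
};

struct EffectRef {
    std::string name;
    TimeSpan span;  // where the effect is placed on the timeline
};

struct ClipModel {
    std::string name;
    TimeSpan span;
    bool hasFade = false;            // convenience fade node, kept in the chain for the whole clip
    std::vector<EffectRef> effects;  // effects attached to the clip, in user order
};

struct TrackModel {
    std::string name;
    std::vector<ClipModel> clips;
    std::vector<EffectRef> effects;  // effects placed on the track
    std::string outputPipe;          // global Pipe this track is plugged into
};

// Builds the user-visible node chain of one clip at time t.
std::vector<std::string> chainFor(const ClipModel& clip, const TrackModel& track, double t) {
    assert(clip.span.contains(t) && "only clips active at t contribute to the node view");
    std::vector<std::string> chain{"source:" + clip.name};
    if (clip.hasFade) chain.push_back("fade");  // the fade precedes all other effects
    for (const auto& fx : clip.effects)
        if (fx.span.contains(t)) chain.push_back(fx.name);
    for (const auto& fx : track.effects)        // track effects follow the clip Pipe...
        if (fx.span.contains(t)) chain.push_back(fx.name);
    chain.push_back("pipe:" + track.outputPipe);  // ...but precede the global Pipe
    return chain;
}

// The node view for one time position: one chain per clip active at t.
std::vector<std::vector<std::string>> nodeViewAt(const TrackModel& track, double t) {
    std::vector<std::vector<std::string>> view;
    for (const auto& clip : track.clips)
        if (clip.span.contains(t)) view.push_back(chainFor(clip, track, t));
    return view;
}
```

Because the chain is recomputed for every time position, an effect covering only part of a clip simply drops out of the view outside its span, which is exactly the temporal information a static node graph cannot show.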

comments, critique, proposals appreciated...

Hermann